For years, businesses have been terrified of the financial and reputational damage that business email compromise (BEC) attacks can cause. I recall working for a company that learned from a supplier that the supplier had been hit by a BEC scheme. The news set off a panic within my company as we wondered whether our own data had been compromised.

People tend to fear the unknown, but BEC exploits the familiar: the routine interactions people have with the individuals and companies they typically do business with. For example, a tried-and-true form of trickery is to set up an email address that swaps the ‘i’ and ‘e’ in a person’s or company’s name, changing the email destination while making the difference hard for the recipient to spot. This has caused paranoia within the business community. Companies have responded by mandating training on BEC schemes, adopting new verification solutions and procedures, and, in some cases, introducing multifactor authentication to protect themselves. Yet, just as companies enhanced their processes, so did the fraudsters.
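To make the swapped-letter trick concrete, here is a minimal sketch of how a mail filter might flag a sender that is nearly, but not exactly, a known contact. It is an illustration under stated assumptions, not a description of any commercial product: the function names, the `max_distance` threshold, and the `.example` addresses are all hypothetical. It uses the optimal string alignment distance, which counts a swap of two adjacent characters as a single edit.

```python
from typing import Iterable, Optional


def osa_distance(a: str, b: str) -> int:
    """Optimal string alignment distance between a and b.

    Counts insertions, deletions, substitutions, and swaps of two
    adjacent characters, each as one edit.
    """
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            # Adjacent swap, e.g. "ie" written as "ei".
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[rows - 1][cols - 1]


def flag_lookalike(
    sender: str, trusted_senders: Iterable[str], max_distance: int = 2
) -> Optional[str]:
    """Return the trusted address that `sender` imitates, or None.

    An exact match to a trusted address is not flagged. The threshold
    `max_distance` is a hypothetical default chosen for illustration.
    """
    sender = sender.strip().lower()
    trusted = [t.strip().lower() for t in trusted_senders]
    if sender in trusted:
        return None
    for address in trusted:
        if osa_distance(sender, address) <= max_distance:
            return address
    return None
```

With a trusted list containing `ap@fieldstone.example`, a message from `ap@feildstone.example` would be flagged as one edit away from the real address, while the genuine address and unrelated senders pass through. A check like this only catches near-miss spellings; it does nothing against a compromised legitimate mailbox.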

The emergence of generative AI and deepfake technology

Generative AI (GenAI) offers many benefits for productivity and learning. However, it has also ushered in a new era of risk and given fraud operators more advanced tools with which to pursue bigger targets. GenAI is evolving rapidly, and so is the sophistication of the fraud committed with it. Attacks have become more elaborate, using tools such as natural language processing and translation services to make requests for a change in payment details sound more realistic. The technology has also widened the pool of recruits, since anyone with a computer can now participate in a scam from anywhere on the globe.

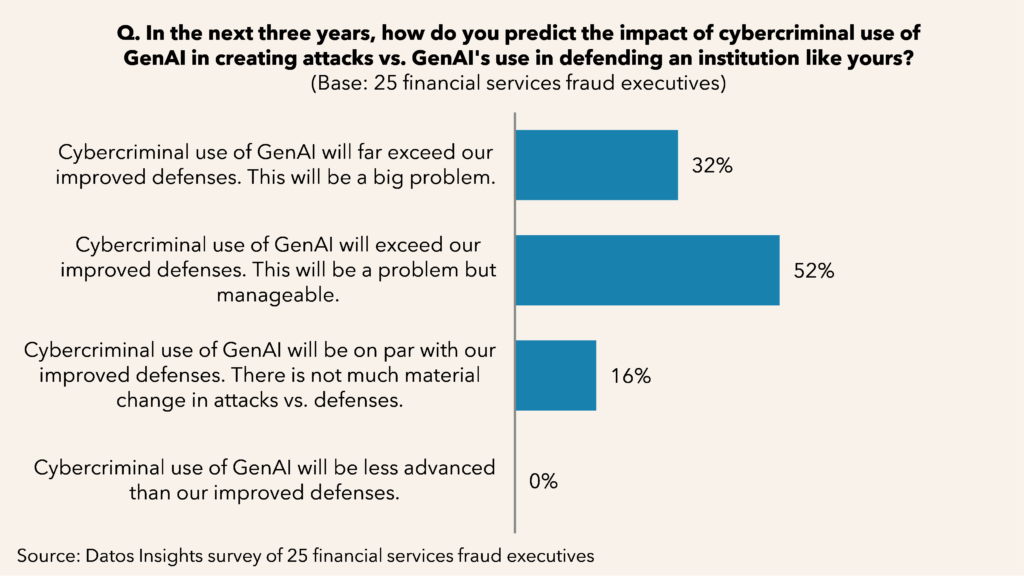

According to a recent Datos Insights survey of 25 fraud executives at financial institutions (FIs) on the expected impact of GenAI, a combined 84% of respondents believed that cybercriminals’ use of GenAI would exceed their institution’s improved defenses. Thirty-two percent believed it would far exceed improved defenses and be a big problem; the other 52% believed it would exceed improved defenses but remain manageable.

Deepfake technology can convincingly mimic a person’s voice, appearance, and mannerisms. Just a short time ago, the cybercriminal’s modus operandi was to initiate a string of emails or text messages that built credibility. However, as payouts increased and the time needed to commit the fraud decreased, cybercriminals adopted AI tools more quickly.

News about deepfakes is increasing rapidly as more fraud operators adopt the technology. Audio deepfakes allow the fraudster to contact a victim using the voice of someone they know. Typically, the call conveys a sense of urgency about transferring money or providing a multifactor code to process a transaction. There are many examples in which a controller received a call from someone posing as their CEO or CFO and demanding that funds be sent immediately to another company. A similar scheme that has garnered national attention is virtual kidnapping. The reports follow a common pattern: a cybercriminal contacts a victim and demands money while using audio to make it seem that a loved one is being held captive.

Audio deepfakes play on the emotions of the victim. A sense of urgency to send a payment compromises the victim’s ability to reason. Fear of losing a job, or of serious harm coming to a loved one, pushes victims to carry out the demands.

Video deepfakes usher in the next order of scamming magnitude. Recently, it was reported that a finance clerk in Hong Kong was instructed to transfer HK$200 million (US$25 million) during a video conference with people she believed to be her CFO and other financial executives. The original point of contact was a phishing email to the employee. She reportedly expressed suspicions, so the cybercriminal quickly arranged a video chat in which her supposed leadership team told her to send the funds. The investigation later found that the individuals represented in the chat all had a public presence. The cybercriminal likely used AI to mimic both their appearance and their voices.

Where do we go from here from an authentication and authenticity perspective?

How can we trust anything that isn’t standing right in front of us? Just as we have always done, we will innovate through the obstacles. AI detection tools and digital authentication may soon be able to tell us when AI is being used. In the meantime, all businesses must stay informed and up to date on the latest techniques cybercriminals are using. Understanding the threats gives companies the opportunity to recognize and mitigate them.

The following are key takeaways companies should consider when building defenses against BEC fraud:

- Train all employees: Provide continuous training, including email testing, to help employees recognize the signs of a BEC attack. Highlight that fraud operators can reach employees through channels other than email, including employees’ cell phones.

- Verify and authenticate all payment and transfer requests: Implement policies and procedures to verify all requests. Institute dual controls and several points of verification.

- Leverage emerging verification tools: Commercial tools are available today to verify the legitimacy of an email address and bank account ownership. Multifactor solutions are another common tool.
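
As a rough illustration of the dual-control takeaway above, the sketch below models a payment request that cannot be released until two people other than the requester have approved it and, when bank details have changed, until someone has confirmed the change through a separate channel such as a call to a known phone number. The class, field names, and the two-approver default are assumptions made for illustration, not a reference to any particular payment system.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class PaymentRequest:
    """Hypothetical payment request subject to dual control."""

    requester: str
    amount: float
    bank_details_changed: bool
    verified_out_of_band: bool = False
    approvers: Set[str] = field(default_factory=set)

    def approve(self, employee: str) -> None:
        # The person who raised the request may not approve it.
        if employee == self.requester:
            raise ValueError("requester cannot approve their own request")
        self.approvers.add(employee)

    def can_release(self, required_approvers: int = 2) -> bool:
        # A change in bank details must be confirmed through a second
        # channel before any number of approvals is enough.
        if self.bank_details_changed and not self.verified_out_of_band:
            return False
        return len(self.approvers) >= required_approvers
```

In this sketch, an urgent request to pay a new account stays blocked even after two approvals until the out-of-band check is recorded, which is the kind of pause that a convincing voice or video call is designed to bypass.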

Don’t wait to act; it may be too late. For more information on deepfakes and how to protect yourself, please reach out to me at [email protected] or check out our published research on current fraud trends.